Median

From Wikipedia, the free encyclopedia

This article is about the statistical concept. For other uses, see Median (disambiguation).

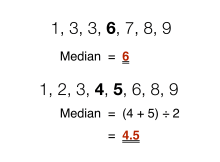

The median is the value separating the higher half of a data sample, a population, or a probability distribution, from the lower half. In simple terms, it may be thought of as the "middle" value of a data set. For example, in the data set {1, 3, 3, 6, 7, 8, 9}, the median is 6, the fourth number in the sample. The median is a commonly used measure of the properties of a data set in statistics and probability theory.

The basic advantage of the median in describing data compared to the mean (often simply described as the "average") is that it is not skewed so much by extremely large or small values, and so it may give a better idea of a 'typical' value. For example, in understanding statistics like household income or assets which vary greatly, a mean may be skewed by a small number of extremely high or low values. Median income, for example, may be a better way to suggest what a 'typical' income is.

Because of this, the median is of central importance in robust statistics, as it is the most resistant statistic, having a breakdown point of 50%: so long as no more than half the data are contaminated, the median will not give an arbitrarily large or small result.

Contents

[hide]Basic procedure[edit]

The median of a finite list of numbers can be found by arranging all the numbers from smallest to greatest.

If there is an odd number of numbers, the middle one is picked. For example, consider the set of numbers:

- 1, 3, 3, 6, 7, 8, 9

This set contains seven numbers. The median is the fourth of them, which is 6.

If there are an even number of observations, then there is no single middle value; the median is then usually defined to be the mean of the two middle values.[1][2] For example, in the data set:

- 1, 2, 3, 4, 5, 6, 8, 9

The median is the mean of the middle two numbers: this is (4 + 5) ÷ 2, which is 4.5. (In more technical terms, this interprets the median as the fully trimmed mid-range.)

The formula used to find the middle number of a data set of n numbers is (n + 1) ÷ 2. This either gives the middle number (for an odd number of values) or the halfway point between the two middle values. For example, with 14 values, the formula will give 7.5, and the median will be taken by averaging the seventh and eighth values.

You will also be able to find the median using the Stem-and-Leaf Plot.

There is no widely accepted standard notation for the median, but some authors represent the median of a variable x either as x͂ or as μ1/2[1] sometimes also M.[3][4] In any of these cases, the use of these or other symbols for the median needs to be explicitly defined when they are introduced.

Discussion[edit]

The median is used primarily for skewed distributions, which it summarizes differently from the arithmetic mean. Consider the multiset { 1, 2, 2, 2, 3, 14 }. The median is 2 in this case, (as is the mode), and it might be seen as a better indication of central tendency (less susceptible to the exceptionally large value in data) than the arithmetic mean of 4.

Calculation of medians is a popular technique in summary statistics and summarizing statistical data, since it is simple to understand and easy to calculate, while also giving a measure that is more robust in the presence of outlier values than is the mean. The widely cited empirical relationship between the relative locations of the mean and the median for skewed distributions is, however, not generally true.[5] There are, however, various relationships for the absolute difference between them; see below.

The median does not identify a specific value within the data set, since more than one value can be at the median level and with an even number of observations (as shown above) no value need be exactly at the value of the median. Nonetheless, the value of the median is uniquely determined with the usual definition. A related concept, in which the outcome is forced to correspond to a member of the sample, is the medoid.

In a population, at most half have values strictly less than the median and at most half have values strictly greater than it. If each group contains less than half the population, then some of the population is exactly equal to the median. For example, if a < b < c, then the median of the list {a, b, c} is b, and, if a < b < c < d, then the median of the list {a, b, c, d} is the mean of b and c; i.e., it is (b + c)/2. Indeed, as it is based on the middle data in a group, it is not necessary to even know the value of extreme results in order to calculate a median. For example, in a psychology test investigating the time needed to solve a problem, if a small number of people failed to solve the problem at all in the given time a median can still be calculated.[6]

The median can be used as a measure of location when a distribution is skewed, when end-values are not known, or when one requires reduced importance to be attached to outliers, e.g., because they may be measurement errors.

A median is only defined on ordered one-dimensional data, and is independent of any distance metric. A geometric median, on the other hand, is defined in any number of dimensions.

The median is one of a number of ways of summarising the typical values associated with members of a statistical population; thus, it is a possible location parameter. The median is the 2nd quartile, 5th decile, and 50th percentile. Since the median is the same as the second quartile, its calculation is illustrated in the article on quartiles. A median can be worked out for ranked but not numerical classes (e.g. working out a median grade when students are graded from A to F), although the result might be halfway between grades if there are an even number of cases.

When the median is used as a location parameter in descriptive statistics, there are several choices for a measure of variability: the range, the interquartile range, the mean absolute deviation, and the median absolute deviation.

For practical purposes, different measures of location and dispersion are often compared on the basis of how well the corresponding population values can be estimated from a sample of data. The median, estimated using the sample median, has good properties in this regard. While it is not usually optimal if a given population distribution is assumed, its properties are always reasonably good. For example, a comparison of the efficiency of candidate estimators shows that the sample mean is more statistically efficient than the sample median when data are uncontaminated by data from heavy-tailed distributions or from mixtures of distributions, but less efficient otherwise, and that the efficiency of the sample median is higher than that for a wide range of distributions. More specifically, the median has a 64% efficiency compared to the minimum-variance mean (for large normal samples), which is to say the variance of the median will be ~50% greater than the variance of the mean—see asymptotic efficiency and references therein.

Probability distributions[edit]

For any probability distribution on the real line R with cumulative distribution function F, regardless of whether it is any kind of continuous probability distribution, in particular an absolutely continuous distribution (which has a probability density function), or a discrete probability distribution, a median is by definition any real number m that satisfies the inequalities

or, equivalently, the inequalities

in which a Lebesgue–Stieltjes integral is used. For an absolutely continuous probability distribution with probability density function ƒ, the median satisfies

Any probability distribution on R has at least one median, but there may be more than one median. Where exactly one median exists, statisticians speak of "the median" correctly; even when the median is not unique, some statisticians speak of "the median" informally.

Medians of particular distributions[edit]

The medians of certain types of distributions can be easily calculated from their parameters; furthermore, they exist even for some distributions lacking a well-defined mean, such as the Cauchy distribution:

- The median of a symmetric distribution which possesses a mean μ also takes the value μ.

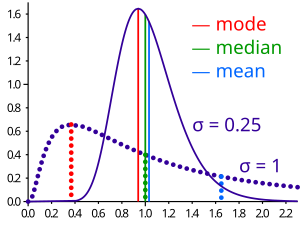

- The median of a normal distribution with mean μ and variance σ2 is μ. In fact, for a normal distribution, mean = median = mode.

- The median of a uniform distribution in the interval [a, b] is (a + b) / 2, which is also the mean.

- The median of a Cauchy distribution with location parameter x0 and scale parameter y is x0, the location parameter.

- The median of a power law distribution x−a, with exponent a > 1 is 21/(a − 1)xmin, where xmin is the minimum value for which the power law holds[8]

- The median of an exponential distribution with rate parameter λ is the natural logarithm of 2 divided by the rate parameter: λ−1ln 2.

- The median of a Weibull distribution with shape parameter k and scale parameter λ is λ(ln 2)1/k.

Populations[edit]

Optimality property[edit]

The mean absolute error of a real variable c with respect to the random variable X is

Provided that the probability distribution of X is such that the above expectation exists, then m is a median of X if and only if m is a minimizer of the mean absolute error with respect to X.[9] In particular, m is a sample median if and only if m minimizes the arithmetic mean of the absolute deviations.

More generally, a median is defined as a minimum of

- ,

as discussed below in the section on multivariate medians (specifically, the spatial median).

This optimization-based definition of the median is useful in statistical data-analysis, for example, in k-medians clustering.

Unimodal distributions[edit]

It can be shown for a unimodal distribution that the median and the mean lie within (3/5)1/2 ≈ 0.7746 standard deviations of each other.[10] In symbols,

where |.| is the absolute value.

A similar relation holds between the median and the mode: they lie within 31/2 ≈ 1.732 standard deviations of each other:

Inequality relating means and medians[edit]

If the distribution has finite variance, then the distance between the median and the mean is bounded by one standard deviation.

This bound was proved by Mallows,[11] who used Jensen's inequality twice, as follows. We have

The first and third inequalities come from Jensen's inequality applied to the absolute-value function and the square function, which are each convex. The second inequality comes from the fact that a median minimizes the absolute deviation function

This proof can easily be generalized to obtain a multivariate version of the inequality,[12] as follows:

where m is a spatial median, that is, a minimizer of the function The spatial median is unique when the data-set's dimension is two or more.[13][14] An alternative proof uses the one-sided Chebyshev inequality; it appears in an inequality on location and scale parameters.

Jensen's inequality for medians[edit]

Jensen's inequality states that for any random variable x with a finite expectation E(x) and for any convex function f

It has been shown[15] that if x is a real variable with a unique median m and f is a C function then

A C function is a real valued function, defined on the set of real numbers R, with the property that for any real t

Medians for samples[edit]

The sample median[edit]

Efficient computation of the sample median[edit]

Even though comparison-sorting n items requires Ω(n log n) operations, selection algorithms can compute the k'th-smallest of n items with only Θ(n) operations. This includes the median, which is the n2'th order statistic (or for an even number of samples, the average of the two middle order statistics).

Selection algorithms still have the downside of requiring Ω(n) memory, that is, they need to have the full sample (or a linear-sized portion of it) in memory. Because this, as well as the linear time requirement, can be prohibitive, several estimation procedures for the median have been developed. A simple one is the median of three rule, which estimates the median as the median of a three-element subsample; this is commonly used as a subroutine in the quicksort sorting algorithm, which uses an estimate of its input's median. A more robust estimator is Tukey's ninther, which is the median of three rule applied with limited recursion:[16] if A is the sample laid out as an array, and

- med3(A) = median(A[1], A[n2], A[n]),

then

- ninther(A) = med3(med3(A[1 ... 13n]), med3(A[13n ... 23n]), med3(A[23n ... n]))

The remedian is an estimator for the median that requires linear time but sub-linear memory, operating in a single pass over the sample.[17]

Easy explanation of the sample median[edit]

In individual series (if number of observation is very low) first one must arrange all the observations in order. Then count(n) is the total number of observation in given data.

If n is odd then Median (M) = value of ((n + 1)/2)th item term.

If n is even then Median (M) = value of [((n)/2)th item term + ((n)/2 + 1)th item term ]/2

- For an odd number of values

As an example, we will calculate the sample median for the following set of observations: 1, 5, 2, 8, 7.

Start by sorting the values: 1, 2, 5, 7, 8.

In this case, the median is 5 since it is the middle observation in the ordered list.

The median is the ((n + 1)/2)th item, where n is the number of values. For example, for the list {1, 2, 5, 7, 8}, we have n = 5, so the median is the ((5 + 1)/2)th item.

- median = (6/2)th item

- median = 3rd item

- median = 5

- For an even number of values

As an example, we will calculate the sample median for the following set of observations: 1, 6, 2, 8, 7, 2.

Start by sorting the values: 1, 2, 2, 6, 7, 8.

In this case, the arithmetic mean of the two middlemost terms is (2 + 6)/2 = 4. Therefore, the median is 4 since it is the arithmetic mean of the middle observations in the ordered list.

We also use this formula MEDIAN = {(n + 1 )/2}th item . n = number of values

As above example 1, 2, 2, 6, 7, 8 n = 6 Median = {(6 + 1)/2}th item = 3.5th item. In this case, the median is average of the 3rd number and the next one (the fourth number). The median is (2 + 6)/2 which is 4.

Sampling distribution[edit]

The distribution of both the sample mean and the sample median were determined by Laplace.[18] The distribution of the sample median from a population with a density function is asymptotically normal with mean and variance[19]

where is the median value of distribution and is the sample size. In practice by definition

These results have also been extended.[20] It is now known for the -th quantile that the distribution of the sample -th quantile is asymptotically normal around the -th quantile with variance equal to

where is the value of the distribution density at the -th quantile.

In the case of a discrete variable, the sampling distribution of the median for small-samples can be investigated as follows. We take the sample size to be an odd number . If a given value is to be the median of the sample then two conditions must be satisfied. The first is that at most observations can have a value of or less. The second is that at least observations must have a value of or less. Let be the number of observations which have a value of or less and let be the number of observations which have a value of exactly . Then has a minimum value of 0 and a maximum of while has a minimum value of (to “fill up” the number of observations to at least ) and a maximum of (to “fill up” the number of observations to ). If an observation has a value below , it is not relevant how far below it is and conversely, if an observation has a value above , it is not relevant how far above it is. We can therefore represent the observations as following a trinomial distribution with probabilities , and . The probability that the median will have a value is then given by

Summing this over all values of defines a proper distribution and gives a unit sum. In practice, the function will often not be known but it can be estimated from an observed frequency distribution. An example is given in the following table where the actual distribution is not known but a sample of 3,800 observations allows a sufficiently accurate assessment of .

| v | 0 | 0.5 | 1 | 1.5 | 2 | 2.5 | 3 | 3.5 | 4 | 4.5 | 5 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| f(v) | 0.000 | 0.008 | 0.010 | 0.013 | 0.083 | 0.108 | 0.328 | 0.220 | 0.202 | 0.023 | 0.005 |

| F(v) | 0.000 | 0.008 | 0.018 | 0.031 | 0.114 | 0.222 | 0.550 | 0.770 | 0.972 | 0.995 | 1.000 |

Using these data it is possible to investigate the effect of sample size on the standard errors of the mean and median. The observed mean is 3.16, the observed raw median is 3 and the observed interpolated median is 3.174. The following table gives some comparison statistics. The standard error of the median is given both from the above expression for and from the asymptotic approximation given earlier.

Sample size

Statistic

| 3 | 9 | 15 | 21 |

|---|---|---|---|---|

| Expected value of median | 3.198 | 3.191 | 3.174 | 3.161 |

| Standard error of median (above formula) | 0.482 | 0.305 | 0.257 | 0.239 |

| Standard error of median (asymptotic approximation) | 0.879 | 0.508 | 0.393 | 0.332 |

| Standard error of mean | 0.421 | 0.243 | 0.188 | 0.159 |

The expected value of the median falls slightly as sample size increases while, as would be expected, the standard errors of both the median and the mean are proportionate to the inverse square root of the sample size. The asymptotic approximation errs on the side of caution by overestimating the standard error.

In the case of a continuous variable, the following argument can be used. If a given value is to be the median, then one observation must take the value . The elemental probability of this is . Then, of the remaining observations, exactly of them must be above and the remaining below. The probability of this is the th term of a binomial distribution with parameters and . Finally we multiply by since any of the observations in the sample can be the median observation. Hence the elemental probability of the median at the point is given by

Now we introduce the beta function. For integer arguments and , this can be expressed as . Also, we note that . Using these relationships and setting both and equal to allows the last expression to be written as

Hence the density function of the median is a symmetric beta distribution over the unit interval which supports . Its mean, as we would expect, is 0.5 and its standard deviation (which is the standard error of the sample median) is . However this finding can only be used if (i) is known or can be assumed (ii) can be integrated to find and (iii) can in turn be inverted. This will not always be the case and even when it is, the cut-off points for can be calculated directly without recourse to the distribution of the median on the unit interval. So while interesting in theory, this result is not much use in practice.

- Estimation of variance from sample data

The value of —the asymptotic value of where is the population median—has been studied by several authors. The standard 'delete one' jackknifemethod produces inconsistent results.[21] An alternative—the 'delete k' method—where grows with the sample size has been shown to be asymptotically consistent.[22] This method may be computationally expensive for large data sets. A bootstrap estimate is known to be consistent,[23] but converges very slowly (order of ).[24] Other methods have been proposed but their behavior may differ between large and small samples.[25]

- Efficiency

The efficiency of the sample median, measured as the ratio of the variance of the mean to the variance of the median, depends on the sample size and on the underlying population distribution. For a sample of size from the normal distribution, the ratio is[26]

For large samples (as tends to infinity) this ratio tends to

Other estimators[edit]

For univariate distributions that are symmetric about one median, the Hodges–Lehmann estimator is a robust and highly efficient estimator of the population median.[27]

If data are represented by a statistical model specifying a particular family of probability distributions, then estimates of the median can be obtained by fitting that family of probability distributions to the data and calculating the theoretical median of the fitted distribution.[citation needed] Pareto interpolation is an application of this when the population is assumed to have a Pareto distribution.

Coefficient of dispersion[edit]

The coefficient of dispersion (CD) is defined as the ratio of the average absolute deviation from the median to the median of the data.[28] It is a statistical measure used by the states of Iowa, New York and South Dakota in estimating dues taxes.[29][30][31] In symbols

where n is the sample size, m is the sample median and x is a variate. The sum is taken over the whole sample.

Confidence intervals for a two sample test where the sample sizes are large have been derived by Bonett and Seier[28] This test assumes that both samples have the same median but differ in the dispersion around it. The confidence interval (CI) is bounded inferiorly by

where tj is the mean absolute deviation of the jth sample, var() is the variance and zα is the value from the normal distribution for the chosen value of α: for α = 0.05, zα = 1.96. The following formulae are used in the derivation of these confidence intervals

where r is the Pearson correlation coefficient between the squared deviation scores

- and

a and b here are constants equal to 1 and 2, x is a variate and s is the standard deviation of the sample.

Multivariate median[edit]

Previously, this article discussed the univariate median, when the sample or population had one-dimension. When the dimension is two or higher, there are multiple concepts that extend the definition of the univariate median; each such multivariate median agrees with the univariate median when the dimension is exactly one.[27][32][33][34]

Marginal median[edit]

The marginal median is defined for vectors defined with respect to a fixed set of coordinates. A marginal median is defined to be the vector whose components are univariate medians. The marginal median is easy to compute, and its properties were studied by Puri and Sen.[27][35]

Spatial median[edit]

For N vectors in a normed vector space, a spatial median minimizes the average distance

where xn and a are vectors. The spatial median is unique when the data-set's dimension is two or more and the norm is the Euclidean norm (or another strictly convexnorm).[13][14][27] The spatial median is also called the L1 median, even when the norm is Euclidean. Other names are used especially for finite sets of points: geometric median, Fermat point (in mechanics), or Weber or Fermat-Weber point (in geographical location theory).[36]

More generally, a spatial median is defined as a minimizer of

this general definition is convenient for defining a spatial median of a population in a finite-dimensional normed space, for example, for distributions without a finite mean.[13][27]Spatial medians are defined for random vectors with values in a Banach space.[13]

The spatial median is a robust and highly efficient estimator of a central tendency of a population.[27][37][38][39]

Other multivariate medians[edit]

An alternative generalization of the spatial median in higher dimensions that does not relate to a particular metric is the centerpoint.

[edit]

Interpolated median[edit]

When dealing with a discrete variable, it is sometimes useful to regard the observed values as being midpoints of underlying continuous intervals. An example of this is a Likert scale where opinions or preferences are expressed on a scale with a set number of possible responses. If the scale consists of the positive integers, an observation of 3 might be regarded as representing the interval from 2.50 to 3.50. It is possible to estimate the median of the underlying variable. If say 22% of the observations are of value 2 or below and 55.0% are of 3 or below (so 33% have the value 3), then the median is 3 since the median is the smallest value of for which is greater than a half. But the interpolated median is somewhere between 2.50 and 3.50. First we add half of the interval width to the median to get the upper bound of the median interval. Then we subtract that proportion of the interval width which equals the proportion of the 33% which lies above the 50% mark. In other words, we split up the interval width pro rata to the numbers of observations. In this case, the 33% is split into 28% below the median and 5% above it so we subtract 5/33 of the interval width from the upper bound of 3.50 to give an interpolated median of 3.35. More formally, the interpolated median can be calculated from

![\int _{(-\infty ,m]}dF(x)\geq {\frac {1}{2}}{\text{ and }}\int _{[m,\infty )}dF(x)\geq {\frac {1}{2}}\,\!](https://wikimedia.org/api/rest_v1/media/math/render/svg/3b1548bab9810d35c35ad9f9d18620945fc6e702)

,

, and the mean

and the mean  lie within (3/5)1/2 ≈ 0.7746 standard deviations of each other.

lie within (3/5)1/2 ≈ 0.7746 standard deviations of each other.

The spatial median is unique when the data-set's dimension is two or more.

The spatial median is unique when the data-set's dimension is two or more.

![f^{-1}((-\infty ,t])=\{x\in R\mid f(x)\leq t\}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a856e2122838aada01c894674c8be2534b8771b9)

is asymptotically normal with mean

is asymptotically normal with mean  and variance

and variance

is the sample size. In practice

is the sample size. In practice  by definition

by definition -th quantile that the distribution of the sample

-th quantile that the distribution of the sample

is the value of the distribution density at the

is the value of the distribution density at the  . If a given value

. If a given value  is to be the median of the sample then two conditions must be satisfied. The first is that at most

is to be the median of the sample then two conditions must be satisfied. The first is that at most  or less. The second is that at least

or less. The second is that at least  observations must have a value of

observations must have a value of  be the number of observations which have a value of

be the number of observations which have a value of  be the number of observations which have a value of exactly

be the number of observations which have a value of exactly  (to “fill up” the number of observations to at least

(to “fill up” the number of observations to at least  (to “fill up” the number of observations to

(to “fill up” the number of observations to  ). If an observation has a value below

). If an observation has a value below  ,

,  and

and  . The probability that the median

. The probability that the median ![{\displaystyle \Pr(m=v)=\sum _{i=0}^{n}\sum _{j=n+1-i}^{2n+1-i}{\frac {(2n+1)!}{i!j!(2n+1-i-j)!}}[F(v-1)]^{i}[f(v)]^{j}[1-F(v)]^{2n+1-i-j}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/29688e3c44423b2867da4c7fa26b964b3083d554)

and from the asymptotic approximation given earlier.

and from the asymptotic approximation given earlier. . Then, of the remaining

. Then, of the remaining  observations, exactly

observations, exactly  and

and ![{\displaystyle f(v){\frac {(2n)!}{n!n!}}[F(v)]^{n}[1-F(v)]^{n}(2n+1)\,dv.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0336011e24a172097f7c8a9d6f8c9f0197fe0ab1)

and

and  , this can be expressed as

, this can be expressed as  . Also, we note that

. Also, we note that  . Using these relationships and setting both

. Using these relationships and setting both  allows the last expression to be written as

allows the last expression to be written as![{\displaystyle {\frac {[F(v)]^{n}[1-F(v)]^{n}}{\mathrm {B} (n+1,n+1)}}\,dF(v)}](https://wikimedia.org/api/rest_v1/media/math/render/svg/bb7956c7a9a5762e41a5f4c481b272a41783c40e)

. However this finding can only be used if (i)

. However this finding can only be used if (i)  —the asymptotic value of

—the asymptotic value of  where

where  is the population median—has been studied by several authors. The standard 'delete one'

is the population median—has been studied by several authors. The standard 'delete one'  grows with the sample size has been shown to be asymptotically consistent.

grows with the sample size has been shown to be asymptotically consistent. ).

).

![\exp \left[\log \left({\frac {t_{a}}{t_{b}}}\right)-z_{\alpha }\left(\operatorname {var} \left[\log \left({\frac {t_{a}}{t_{b}}}\right)\right]\right)^{0.5}\right]](https://wikimedia.org/api/rest_v1/media/math/render/svg/3eef8fb061ec03288473ff8d0cf288ec640b4f75)

![\operatorname {var} [\log(t_{a})]={\frac {\left({\frac {s_{a}^{2}}{t_{a}^{2}}}+\left({\frac {x_{a}-{\bar {x}}}{t_{a}}}\right)^{2}-1\right)}{n}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f6ebe207a913fe43de7673dd8078f35e1e5f8937)

![\operatorname {var} \left[\log \left({\frac {t_{a}}{t_{b}}}\right)\right]=\operatorname {var} [\log(t_{a})]+\operatorname {var} [\log(t_{b})]-2r(\operatorname {var} [\log(t_{a})]\operatorname {var} [\log(t_{b})])^{0.5}](https://wikimedia.org/api/rest_v1/media/math/render/svg/fd02ef99500b4437503113b6e10b73f1158f64c5)

and

and

;

; for which

for which  is greater than a half. But the interpolated median is somewhere between 2.50 and 3.50. First we add half of the interval width

is greater than a half. But the interpolated median is somewhere between 2.50 and 3.50. First we add half of the interval width  to the median to get the upper bound of the median interval. Then we subtract that proportion of the interval width which equals the proportion of the 33% which lies above the 50% mark. In other words, we split up the interval width pro rata to the numbers of observations. In this case, the 33% is split into 28% below the median and 5% above it so we subtract 5/33 of the interval width from the upper bound of 3.50 to give an interpolated median of 3.35. More formally, the interpolated median can be calculated from

to the median to get the upper bound of the median interval. Then we subtract that proportion of the interval width which equals the proportion of the 33% which lies above the 50% mark. In other words, we split up the interval width pro rata to the numbers of observations. In this case, the 33% is split into 28% below the median and 5% above it so we subtract 5/33 of the interval width from the upper bound of 3.50 to give an interpolated median of 3.35. More formally, the interpolated median can be calculated from![{\displaystyle m_{\text{int}}=m+w\left[{\frac {1}{2}}-{\frac {F(m)-1/2}{f(m)}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/3c95b8278a436f17db1b875c1951c45698439209)

ConversionConversion EmoticonEmoticon